John Zielinski

composer - saxophone - flute - synth - voice

I Know What I Like (and I Like What I Know)

This was published in June of 2019 in Central Standard Time. In the days since the pandemic of 2020 started many people have been stuck at home and binging on electronic media. Not surprisingly (to me), a number of those people have expressed increasing dissatisfaction with what's been pumped out. Perhaps this explains it.

Have you ever considered why you like the things that you like? I’ve thought about this many times and it was brought to mind recently while having a conversation with someone about the latest blockbuster movie. The other person really enjoyed it, but I was nowhere near as enthusiastic. This was despite the fact that we’re often in agreement in our assessment of films. Maybe you’ve had similar experiences.

Each of us has a unique set of preferences. Think about your preferences in music. You probably have more fondness for one genre or style than you do for others. Within a single genre you likely prefer one composer’s or songwriter’s work over another. Even within a single creator’s body of work, you prefer some pieces over others. You know that this is true, but why is it true?

When I was at university as a music major studying composition in the 1970s, one of my professors introduced me to information theory. Claude Shannon, the author of the 1948 paper A Mathematical Theory of Communication that effectively introduced information theory, may well be considered the father of the digital age. The impact of information theory, though, goes well beyond the digital domain as it deals with the transmission of messages.

Many – perhaps most – people treat the words “data” and “information” as interchangeable. They’re really not. Data (the plural of datum) is raw. It exists but has no significance apart from that existence. Information, on the other hand, is data that has been given meaning. That meaning may or may not be useful, but it exists, nonetheless. “Girl,” “boy,” “the,” and “sees” are data. Compare that list with, “The boy sees the girl.”

About 20 years after I received my degree, I was working on computer-based pattern recognition. This is a subset of artificial intelligence (AI). One of the key goals was performance optimization. An algorithm that takes 3 days to identify a pattern is much less useful than one that can identify the same pattern in less than 1 second. A cognitive psychologist with whom I was acquainted at the time asserted that human beings recognize patterns (and learn) at the fringes of what they already know. This takes us back to the difference between data and information.

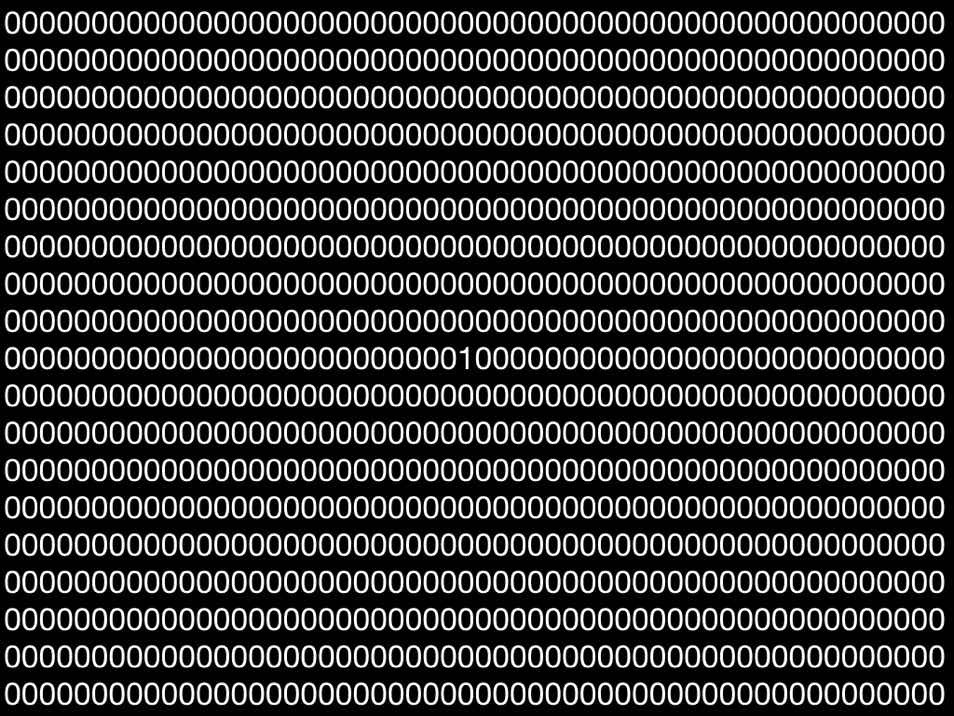

Consider a long string of zeroes and ones. How long a string? How about 1 million digits. That’s a lot of data. It takes up quite a bit of space and it takes time to transmit from a sender to a receiver. Now, imagine 2 different versions of that string. The first consists of 1,000,000 zeroes. The second consists of 500,000 zeroes followed by a single one followed by 499,999 more zeroes. The complete redundancy of the first string makes the probability that it has meaning approach 0%. (It’s possible that it carries the meaning, “I have no meaning,” but that’s a stretch.) The presence of that single one in the second string, however, has the ability to create meaning. Why is it there? What does it indicate?

If we translate the strings into a simpler, compressed form we can reduce the amount of space required to hold them, reduce the amount of time it takes to transmit them, and make it easier to spot the possibility of meaning. Here’s a basic translation.

- 1,000,000 (0)

- 500,000 (0) > 1 (1) > 499,999 (0)

Although these are trivial examples, they demonstrate the essence of digital compression even though the actual implementations vary.

In my AI work one aspect was related to developing a large enough repository of patterns so that the software could quickly compare those to the data that it was trying to evaluate. This allowed the software to quickly identify some patterns and dedicate its resources to those that didn’t exactly match anything on file. It had to “think” more about the patterns that were different.

At this point you may well be saying, “What’s any of this got to do with my preferences?” Let’s circle back.

If humans truly recognize patterns at the fringes of what they know, then both what we know and how much we know are really important. Let’s stick with music as we move forward.

If you lived in the United States or western Europe sometime in the last 100 years, I’d be willing to wager that nearly all of the music that you had heard during that time was based on equal temperament. As a result, there are only 12 pitches that can be used. On top of that, the music was probably rooted in the major-minor system. This system puts constraints on what pitch may follow another when spinning out a melody. If the sequence of notes is short enough it becomes difficult to tell the difference between My Sweet Lord and He’s So Fine. As a matter of fact, there may actually be no difference. As a melodic line gets longer, though, the probability of it being an exact match gets smaller.

While a melody that’s substantially similar to something with which you’re already familiar has little to offer in terms of new information, something that’s totally foreign has no touchpoints for the listener. You’d likely need to expend quite a bit of effort to penetrate the information and get to the meaning. Based on that, I’d expect you to be more receptive to George Gershwin’s I’ve Got Rhythm (even if it’s not your usual cup of tea) than to Alban Berg’s opera Lulu or Arnold Schoenberg’s Pierrot Lunaire. This potentially explains affinity for a certain genre.

So, if the lack of similarity between one piece of music and another may explain the amount of information that needs to be processed and the rejection of the piece that requires too much processing, how might this explain rejecting a piece of music that’s similar to another and, thus, requires less processing. Once again, it comes to the fringe of what we know and the amount of information present.

How interesting is it to listen to an audio sine wave? I’m guessing that if you visited the target of that link you were probably not enraptured and didn’t even listen to the full 30 seconds. That recording conveyed no information to you. While the threshold of boredom is surely different, listening to a song that’s much too close to other songs that you’ve heard may result in there being little to no novel information being transmitted. This could explain why attempts to copy the sound of a highly successful popular song often fail. Consciously or unconsciously, listeners respond with, “Eh. I’ve heard it before.” By extension, this may explain why listeners burn out on a particular artist or style. As the reference base increases in size it becomes more and more difficult to transmit enough information to keep things interesting. A human’s reference base, though, is much different from a computer’s.

Computers have the ability to manage huge reference repositories without degradation over long periods of time. Human memory isn’t nearly that good. After years of listening to the songs on a particular album you may bore of them and put the album on the shelf. A couple of years later you think, “I haven’t heard this in a while. Let me give it a spin.” As the sound spills out of the speakers you have a renewed interest in what you’re hearing. Does this disprove what I’ve said earlier? Maybe and maybe not.

Over time memories fade. As one research paper from the National Institutes of Health noted, “The ‘imperfection’ of memory has been known since the first empirical memory experiments by Ebbinghaus, whose famous ‘forgetting curve’ revealed that people are unable to retrieve roughly 50% of information one hour after encoding. In addition to simple forgetting, memories routinely become distorted.” Given enough time without exposure, then, the memory of a piece of music ends up being different than the piece of music. When it’s rediscovered the comparison to what was known and has been lost or distorted may drive up the information rate and make the piece interesting once again.

So far, I’ve focused on information in music, but it should be easy to see how this plays out in other areas such as novels, films, television, and theater. Have you ever tried reading all the works of Dickens or Stephen King one immediately after the other? How long did it take before you got bored and gave up? Watch enough superhero action films and the next one likely won’t excite you. See enough versions and episodes of CSI: City Name Here and the latest will be just more of the same. You may have fallen on the floor laughing the first time that you saw Robin Williams explaining golf, but did you laugh as hard the second time? Did you laugh at all the 10th time?

This piece has focused on data and information in the arts and entertainment. What’s worth keeping in mind is that many of these ideas may be useful in other areas. Perhaps the two most important things are these. First, we match patterns and learn at the fringes of what we already know. The upshot of this is that if someone wants to more easily recognize patterns and learn new things, then that person should work on continuously expanding their personal repository. Every new thing that you learn will help make it easier to learn the next thing. Second, learn to quickly spot and tell the difference between data streams that contain low levels of information and those that contain high levels of information. This skill will help you to plow through more data more quickly by deciding what deserves your attention and what doesn’t. In the contemporary world of rapid and often unpredictable change this will serve you well.

Credit where credit is due: The title of this piece was inspired by the lyrics of the song I Know What I Like (In Your Wardrobe) by Genesis.

This article © 2019 by John Zielinski

all rights reserved